Crowd Struck

#149 - What the worldwide outage means to cybersecurity and cyber insurance professionals

“Global Outage” and what we learn from it.

The format of this edition is going to be different from usual. This week the focus is on the biggest global outage in a long time.

The beginning

It was a routine day. I was returning from a business trip. I took a cab to the airport. I entered the airport, saw the long queues at the check-in counters, judged the people with huge check-in bags, pooh-poohed the people who still believe in a physical boarding pass and breezed my way toward the security check.

Routine.

That's where the routine ended. I was abruptly stopped at the security gates by burly security personnel, who informed me that digital boarding passes were not working. Something was wrong with the computers, and I would have to go to the counter and get a physical boarding pass if I wished to board a flight.

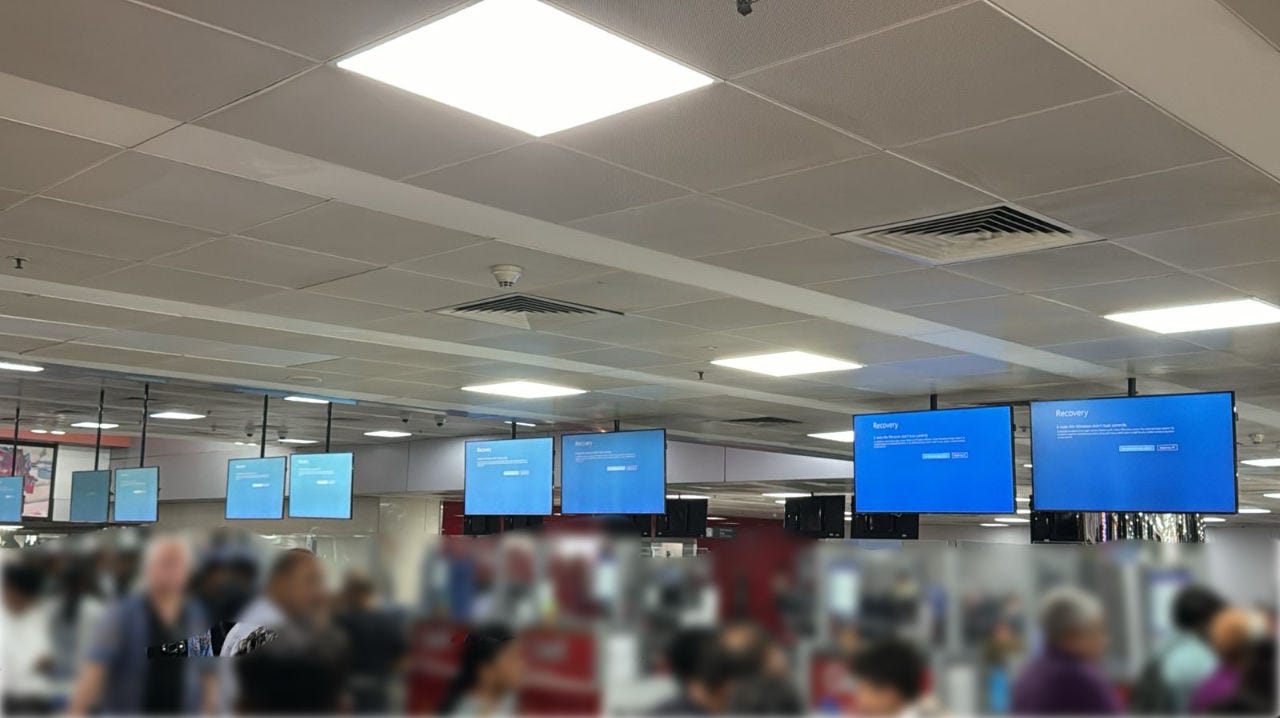

That was when I actually surfaced, looked up and saw what was happening. None of the displays at the airport were working. All of them showed the notorious Windows Blue Screen of Death (BSOD).

To cut a long story short, after a few benign enquiries, I stood in the queue for about two hours behind the good people who wanted to check in their baggage and wanted the comfort of a physically printed boarding pass. That gave me two hours to find out what had happened.

I now have, for the first time in my life, a handwritten boarding pass, which I plan to use in all my business continuity training sessions from now on.

What happened?

The initial news was that Microsoft had an issue and that all Windows systems were down. Slowly, it emerged that it was an update pushed by CrowdStrike, a cybersecurity company, that was responsible for my standing for 2 hours in a check-in queue with a mobile boarding pass and no check-in luggage.

CrowdStrike is a cybersecurity company that operates in the 'Endpoint Security' space. That's cybersecurity geek talk for saying that it works with computers (desktops, laptops, servers, etc.).

CrowdStrike has multiple products in this space that, for simplicity, we can call a type of antivirus software. For such software to work on a computer, CrowdStrike needs to install a piece of software called an 'agent' on it. This agent needs access to sensitive areas of the operating system and can do more than a regular piece of software. This is because many kinds of malicious software tamper with operating system files, and the antivirus needs the permissions to stop that from happening. Whenever CrowdStrike identifies new types of attacks or viruses, it releases an update that helps prevent them from infecting your computer. So far, so good.

On the fateful day, CrowdStrike released a routine update. However, this update was different. It caused the CrowdStrike agent to try to access a part of memory that it should not have touched. Because the agent runs with kernel-level access, inside the protected core of Windows, Windows could not simply close the faulty program. Instead, it crashed the whole system (the BSOD) to protect itself and restarted. On restart, the CrowdStrike agent loaded the same update, tried to access the same memory again and crashed again. This started what is known as a 'reboot loop': a computer that constantly restarts.

CrowdStrike identified the bug and released a fix quickly. Applying the fix posed operational challenges, since the affected systems were stuck in a reboot loop, but these were overcome. In a day or so, the world went back to normal.

The CrowdStrike CEO released an official statement on the 19th of July with the following content:

" The outage was caused by a defect found in a Falcon content update for Windows hosts. Mac and Linux hosts are not impacted. This was not a cyberattack.

We are working closely with impacted customers and partners to ensure that all systems are restored, so you can deliver the services your customers rely on.

CrowdStrike is operating normally, and this issue does not affect our Falcon platform systems. There is no impact to any protection if the Falcon sensor is installed. Falcon Complete and Falcon OverWatch services are not disrupted."

The cybersecurity implications

TPRM?

You will find a lot of buzz on the internet about third-party risk management, or TPRM. Many cybersecurity professionals blame the outage on a lapse in managing third-party risk, since CrowdStrike is a third party to most of its clients. I believe this line of thinking completely misses the point.

Third-party risk management, while important, is not the root cause of what happened. Any organisation, even one with a very mature third-party risk management program, would not identify an endpoint security vendor as a key third party; it does not make sense. If you add it now, you should also add Microsoft as a key third party, since you are running Windows on your endpoints as well. Also, what mitigations would you consider? A contractual obligation, at most. And how detailed a risk assessment of your third party would you need to do to anticipate a scenario like this one?

It's actually BCMS

The actual lapse is the lack of a sound BCP. A business continuity plan is supposed to handle large-scale outages for which implementing a preventive control is difficult and/or expensive. How many business continuity plans actually address an 'endpoint outage'? To be fair, over the years I have created multiple BCP setups of varying complexity, and not once have I identified or tested a scenario where endpoints are unavailable. Oops.

One mitigation is a heterogeneous endpoint environment: some Windows, some Mac and some Linux machines. However, the cost of managing such a complex environment might just outweigh the cost of a two-day outage, so this calls for a proper cost-benefit analysis.

CrowdStrike did everything they should. Microsoft did everything they should. IT departments in companies did everything they should. Business departments did not.

Standing in the long queue, I observed the people at the counter. They suddenly had to issue boarding passes by hand. Somewhere deep in the BCP strategy document, there is probably a line that maps the scenario "Network Outage" to the strategy "Issue Manual Boarding Passes". Never spoken about. Never tested. Not even in a desktop test. The people at the counter were lost. They had never been trained on what needs to be checked to issue a boarding pass manually. They relied on a few superhuman supervisors who were running between counters, solving queries.

The number of counters remained the same as when processing a passenger took a fifth of the time. No new teams were deployed; they were probably not on standby. Nor were there clear instructions telling passengers what to do, as the displays were not working. Try finding a whiteboard at a modern airport.

Finally, I was close to the counter. The airline staff, realising the challenges, had identified a couple of people to open a new counter, which would double the speed of passenger processing. I was second in line at the new counter. The BCP seemed to be working, until the lady at the counter asked the lady in front of me, "Ma'am, do you have a pen?" That's when I realised how much detail is required to turn a strategy of "Print Boarding Passes manually" into an effective, working plan.

For many businesses, the most important action will be to do nothing. There is nothing actionable for them to learn here, because a day of outage has no real business impact on them. Just switch off your machines, go away for the long weekend and come back to find everything working fine. Deciding to do nothing in response to a two-day outage is a genuine business continuity strategy.

What about patch testing, then?

CrowdStrike has to investigate this and identify the root cause of the lapse. It's not every day that a program with kernel-level (or near-kernel) access gets rolled out with such an obvious bug.

It's also a wake-up call for organisations that deploy large-scale patches across the globe. Do they have robust testing and phased rollout procedures? Here are some things an organisation should check that it has in place:

What patch testing procedures do you have?

What roll back procedures do you have?

What incident response processes do you have?

Do you have a phased rollout strategy? If yes, what checks are performed from one phase to another?
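To make the last question concrete, here is a minimal Python sketch of a phased (ring-based) rollout gate. It is a simulation under stated assumptions, not any vendor's actual process: the ring names, endpoint counts and the 1% crash-rate threshold are all hypothetical, and the number of crashed endpoints per ring is supplied as input rather than measured.

```python
from dataclasses import dataclass


@dataclass
class Ring:
    name: str         # hypothetical ring name, e.g. "canary"
    hosts: int        # endpoints in this ring
    crashed: int = 0  # endpoints that failed the post-update health check (simulated input)


MAX_CRASH_RATE = 0.01  # assumed gate: stop if more than 1% of a ring fails


def roll_out(rings):
    """Deploy ring by ring, promoting only while each ring passes the health gate.

    Returns (rings_deployed, halted_at); halted_at is None if every ring passed.
    """
    deployed = []
    for ring in rings:
        deployed.append(ring.name)  # the update reaches this ring
        crash_rate = ring.crashed / ring.hosts if ring.hosts else 0.0
        if crash_rate > MAX_CRASH_RATE:
            # Gate failed: later rings never receive the update; this ring needs a rollback.
            return deployed, ring.name
    return deployed, None


if __name__ == "__main__":
    rings = [
        Ring("internal", hosts=100),
        Ring("canary", hosts=1_000, crashed=250),
        Ring("broad", hosts=100_000),
    ]
    print(roll_out(rings))  # → (['internal', 'canary'], 'canary')
```

The design point is that the "broad" ring never receives the bad update: the damage stays contained to the small number of endpoints in the earlier rings, which is exactly what the check between phases is for.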

The cyber insurance perspective

Having cyber insurance does not automatically make you eligible for a claim in this instance. Your cyber policy will have a section that covers business interruption due to a cyber breach. You will have to check your policy wording to see whether this outage counts as a breach. In most cases, it would not.

If you find that your policy does treat a failed patch update as a payable claim, you then have to check for any deductible or excess on the policy. A deductible is the amount of loss below which the insurer will not pay. For business interruption, it is typically expressed as a waiting period, a minimum duration of outage before cover applies, and you will find that this ranges from 1 to 3 days, depending on the policy and your negotiated terms. Hence, for an outage of a day or two, you might not be able to claim anything. Of course, this also depends on the policy that you have.
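A quick way to see why a short outage may yield nothing is to work through the waiting-period arithmetic. The sketch below assumes a simple time-based deductible where the hours inside the waiting period are never paid; real policy wordings vary, so check how yours treats those hours. The outage length and hourly loss figures are hypothetical.

```python
def payable_bi_loss(outage_hours: float, waiting_period_hours: float,
                    loss_per_hour: float) -> float:
    """Business-interruption amount payable under a simple time-based deductible.

    Assumption: losses during the waiting period stay with the insured and only
    the hours beyond it are paid. Check your own policy wording.
    """
    covered_hours = max(0.0, float(outage_hours) - waiting_period_hours)
    return covered_hours * loss_per_hour


# Hypothetical figures: a two-day (48-hour) outage costing 10,000 per hour.
print(payable_bi_loss(48, 72, 10_000))  # 3-day waiting period → 0.0
print(payable_bi_loss(48, 24, 10_000))  # 1-day waiting period → 240000.0
```

With a 3-day waiting period, a 2-day outage pays nothing at all; with a 1-day waiting period, only the second day is paid.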

If you are a cyber insurer, now is the time to understand the accumulation (the aggregated exposure to a single event) you carry for Windows systems running CrowdStrike. You might not be able to calculate accumulation for every piece of software installed, but you will be able to calculate it per operating system: Windows, Linux or Mac. This should feed into your wider accumulation computations.
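As a rough illustration, the sketch below computes a per-operating-system accumulation in Python. The portfolio, insured names and limits are hypothetical, and counting an insured's full limit against every operating system it uses is a simplifying, worst-case assumption; a real model would likely weight by endpoint share and expected loss.

```python
from collections import defaultdict

# Hypothetical portfolio: insured -> (policy limit, operating systems on its endpoints)
PORTFOLIO = {
    "Insured A": (5_000_000, {"Windows"}),
    "Insured B": (2_000_000, {"Windows", "Mac"}),
    "Insured C": (1_000_000, {"Linux"}),
}


def accumulation_by_os(portfolio):
    """Total policy limit exposed to an event hitting each operating system.

    An insured counts towards an OS if any of its endpoints run it, so this is a
    worst-case, full-limit view rather than an expected loss.
    """
    totals = defaultdict(int)
    for limit, systems in portfolio.values():
        for os_name in systems:
            totals[os_name] += limit
    return dict(totals)


print(accumulation_by_os(PORTFOLIO))
```

For this made-up portfolio, a Windows-wide event touches 7,000,000 of limit, against 2,000,000 for Mac and 1,000,000 for Linux.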