Blake Lemoine, an engineer at Google, kicked up a storm when he alleged that LaMDA, the AI platform Google was developing, was sentient.

In this edition of LongReads, we delve into this claim and try to figure out how it applies to cybersecurity professionals like us. Hang in there, because this story gets technical as well as philosophical.


What is LaMDA?

LaMDA stands for “Language Model for Dialogue Applications”. It is an artificially intelligent ‘language model’: a model that works with words. It is based on a neural network architecture called the Transformer, but this is getting too technical for us mere mortals (cybersecurity professionals). To simplify to a fault, LaMDA is an AI model that has analysed vast quantities of existing text and uses what it learned to predict which word is most likely to follow a given sequence of words, building whole sentences one word at a time.

For example, if I say “Nice day! It is bright and _______”, you know that the most probable word to fill the blank is “sunny”. It could be “cheery”, but that is less probable. However, it is definitely not going to be “rainy” or “cloudy”. How do you know? You infer it from the sentences you have seen before. In essence, this is what LaMDA does.
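To make the idea concrete, here is a toy sketch of next-word prediction by counting. It is nowhere near how LaMDA actually works (LaMDA uses a large neural network, not word counts), and the tiny corpus and the function names `train` and `next_word_probabilities` are made up purely for illustration. The sketch looks at the last two words of a prompt and estimates how likely each candidate word is to come next, based on how often it followed those two words in the corpus.

```python
from collections import Counter, defaultdict

# A tiny, made-up corpus standing in for the "vast quantities" of text a real model sees.
CORPUS = [
    "it is bright and sunny today",
    "the morning was bright and sunny",
    "a bright and cheery room",
    "the sky is grey and cloudy",
    "it looks grey and rainy",
]


def train(sentences, context_size=2):
    """Count which word follows each run of `context_size` words."""
    followers = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            followers[context][words[i]] += 1
    return followers


def next_word_probabilities(followers, prompt, context_size=2):
    """Estimate P(next word | last `context_size` words of the prompt) from the counts."""
    context = tuple(prompt.lower().split()[-context_size:])
    counts = followers.get(context, Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # never seen this context, so no prediction
    return {word: count / total for word, count in counts.items()}


if __name__ == "__main__":
    model = train(CORPUS)
    probs = next_word_probabilities(model, "Nice day! It is bright and")
    print(probs)                          # "sunny" is twice as likely as "cheery"
    print(max(probs, key=probs.get))      # most likely next word
```

Note that “rainy” and “cloudy” get no probability at all here, simply because they never followed “bright and” in the examples the model was shown.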

Google tried to improve LaMDA by making its responses more ‘satisfying’ and not merely ‘sensible’, chiefly by making them more specific. Example?

“I ran a Marathon”

Sensible Response: That’s nice!

Satisfying Response: Great! I remember running one in summer. It was hot! How did you handle the heat?

Now, that is an AI you could hold long evening conversations with, maybe even over a beer or two!

What is Artificial Intelligence (AI)?

Artificial intelligence refers to computer programs that learn from their inputs and adapt accordingly, something that, until now, only living things could do!

When we say ‘intelligent’, we mean psychological skills such as perception, association, prediction, planning and motor control. (This is a definition I liked from the book Artificial Intelligence: A Very Short Introduction by Margaret A. Boden.)

In fact, AI uses algorithms inspired by living things, such as genetic algorithms (modelled on evolution) and neural networks (loosely modelled on the brain). The idea is that, to build something that mimics human behaviour, you take what we have learned from nature and humanity, convert it into algorithms and then pray that the algorithms behave like human beings.

Once the algorithm is deployed, how do we test whether it behaves like a human being, or at least well enough to fool a real human being for a short while? Scientists have been wrestling with this problem for a long time.

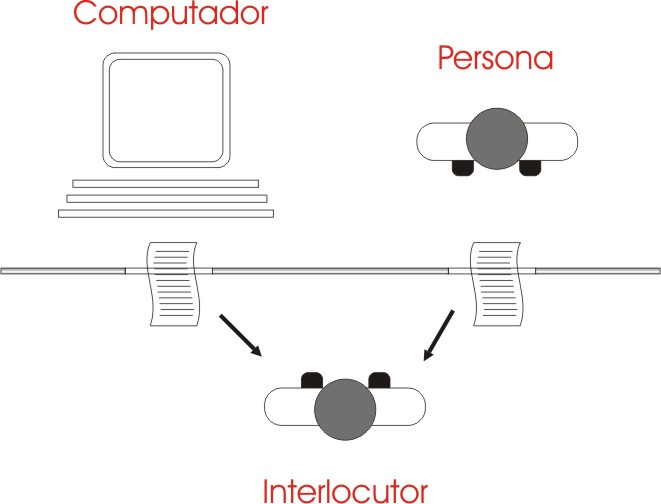

The Turing test was proposed by Alan Turing in 1950. As per Wikipedia:

The "standard interpretation" of the Turing test, in which player C, the interrogator, is given the task of trying to determine which player – A or B – is a computer and which is a human. The interrogator is limited to using the responses to written questions to make the determination.

Modern chatbots sometimes pass the Turing test. However, because it is not a rigorously scientific test, there are many variants of it and many differing results.

In fact, sometimes I feel that we should have a Turing test for humans as well. There are some so-called humans I communicate with about whom I am not so sure. 😈

Definition of Artificial Intelligence

Artificial intelligence is too broad a term to define in one go. Hence, it is usually split into the following categories:

Narrow AI

Narrow AI, or weak AI, is artificial intelligence that does one job very well. It can be very smart at that job, but cannot do any other. Remember the joke “A computer once beat me at chess, but it was no match for me at kickboxing”? That is a classic example of narrow AI.

Narrow AI is ubiquitous today, from virtual assistants (Siri, Alexa, Cortana, etc.) to self-driving cars and surgical robots.

General AI

When we speak of sentient AI and the fear that a robot has come ‘alive’, we mean general AI. A general AI can perform any intellectual task that a human being can. This is the point at which we would say that the AI is sentient, or alive!

Super AI

Super AI is AI that has crossed the ‘AI singularity’: it is self-aware and smarter than any human being can be. All the doomsday movies about AI fit here.

There is another, more structured (though slightly more complex) classification of AI. A good article on these different types of AI can be found here.

Reactive Machines

Limited Memory

Theory of Mind

Self Aware

The mind map below summarises the different types of AI.

The ‘AI singularity’ and philosophy

The singularity is the point where AI achieves sentience, or self-awareness. What exactly do we mean by sentience or self-awareness? We now move from the realm of technology to the realm of philosophy and spirituality.

The question appears simple. After all, everyone knows who they are. But when you start thinking about AI self-awareness, you realise that to answer the question you first need to define self-awareness itself, and to define self-awareness, you need to define the ‘self’.

After all, it is the self that the AI is aware of.

Here is a very interesting lecture on ‘Who Am I?’, based on the ancient Indian text, the Mandukya Upanishad.

The problem of the self is not just the preserve of Indian monks but also of modern philosophers, who have even given it a name: the hard problem of consciousness. How and why do we have ‘experiences’ that define our ‘consciousness’?

When you look at a flower, you instinctively know it is a flower. But when you try to pin down what makes a flower a flower, you really have to think. When you are ‘training’ AI/ML algorithms to learn about flowers, you ‘show’ the algorithm various types of flowers… and pray that it will be able to understand what a flower ‘is’.
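Here is a minimal sketch of what ‘showing’ an algorithm examples can look like, using a nearest-centroid classifier, one of the simplest learning techniques (chosen here for illustration, not because real image models work this way). The measurements, the labels “rose” and “daisy”, and the function names are all made up. Notice that the algorithm never receives a definition of a flower; it only averages the examples it was shown, and it will confidently label any input, even one that is not a flower at all.

```python
from collections import defaultdict
from math import dist
from statistics import fmean

# Made-up training examples: (petal_length_cm, stem_length_cm) -> label.
TRAINING = [
    ((4.0, 40.0), "rose"),
    ((4.5, 45.0), "rose"),
    ((3.5, 35.0), "rose"),
    ((1.0, 10.0), "daisy"),
    ((1.5, 15.0), "daisy"),
    ((1.2, 12.0), "daisy"),
]


def train(examples):
    """Average the feature vectors of each label into one centroid per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    centroids = train(TRAINING)
    print(predict(centroids, (3.8, 38.0)))  # resembles the roses it was shown
    print(predict(centroids, (1.1, 11.0)))  # resembles the daisies it was shown
    print(predict(centroids, (0.0, 0.0)))   # not a flower, yet it still gets a label
```

The design choice is deliberate: with no notion of ‘not a flower’, the model can only say which of its averages an input is closest to.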

Without a proper definition of consciousness, it is difficult to know whether any AI is sentient, because we do not even know whether we are sentient ourselves, or whether what we define as sentience is what sentience actually is!

So, have we reached this singularity?

Many articles have rebutted this claim and said that LaMDA is not sentient. Here is one on CNN. Here is another in The Economist, whose authors asked GPT-3 (a different language model, built by OpenAI) various weird questions and got really weird responses. I really liked this one:

D&D (these are the people who conducted the test): How many parts will the Andromeda galaxy break into if a grain of salt is dropped on it?

gpt-3 (the AI): The Andromeda galaxy will break into an infinite number of parts if a grain of salt is dropped on it.

The popular YouTube channel Computerphile has also argued that LaMDA is not sentient.

So, what next?

As Google, the creators of LaMDA, say on their blog:

Language might be one of humanity’s greatest tools, but like all tools it can be misused. Models trained on language can propagate that misuse — for instance, by internalizing biases, mirroring hateful speech, or replicating misleading information. And even when the language it’s trained on is carefully vetted, the model itself can still be put to ill use.

We can leave the question of what sentience is to the philosophers, but we cannot leave the current problem of misuse to them.

AI might not be sentient, but it might seem sentient, which is in fact worse: we would not be able to tell whether the AI has acquired humanness or not. Read a very interesting post about this on Wired here.

AI and ML are already causing problems. Check out the documentary ‘Coded Bias’ on Netflix, or read a review of it here. Seemingly innocuous algorithms, when left to learn for themselves, can amplify minor biases held by their coders or present in their training data.

We are at a point where the debate about AI policies and rules has become more important than the largely philosophical and technical debate about AI sentience. And we already know enough for policy decisions to be made.

As infosec professionals, we have a moral responsibility to ensure that the AI technology our organisation is working on has the right kind of data and the right kind of learning. AI poisoning, where an attacker tampers with the training data to corrupt what the model learns, is a real threat vector and needs to be identified in our threat models and risk assessments.
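To show why poisoning belongs in the threat model, here is a minimal sketch of a label-flipping attack on a deliberately simple detector. Everything in it is hypothetical: the ‘anomaly scores’, the rule of placing the cut-off halfway between the average benign and average malicious score, and the function names. A real detector would be far more complex, but the mechanism is the same: a few mislabelled training records quietly move the decision boundary, and a real attack slips through.

```python
from statistics import fmean


def train_threshold(samples):
    """Learn a cut-off halfway between the mean benign and mean malicious scores."""
    benign = [score for score, label in samples if label == "benign"]
    malicious = [score for score, label in samples if label == "malicious"]
    return (fmean(benign) + fmean(malicious)) / 2


def classify(threshold, score):
    """Flag anything scoring above the learned cut-off."""
    return "malicious" if score > threshold else "benign"


# Hypothetical training data: (anomaly_score, label).
CLEAN = [(1, "benign"), (2, "benign"), (3, "benign"),
         (8, "malicious"), (9, "malicious"), (10, "malicious")]
# The attacker injects high-scoring records deliberately mislabelled as benign.
POISON = [(9, "benign")] * 4

if __name__ == "__main__":
    attack_score = 7
    clean_threshold = train_threshold(CLEAN)
    poisoned_threshold = train_threshold(CLEAN + POISON)
    print(clean_threshold, classify(clean_threshold, attack_score))
    print(poisoned_threshold, classify(poisoned_threshold, attack_score))
```

Trained on clean data, the cut-off sits at 5.5 and the attack scoring 7 is flagged; after four poisoned records the cut-off rises to 7.5 and the same attack is waved through as benign. This is why the provenance and integrity of training data deserve the same scrutiny as code.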

To conclude

AI might or might not become sentient, but it is here to stay. More and more systems are being built around AI. The following needs to happen:

The ethics of AI should be explored by philosophers

The rules and framework for AI operations should be explored by lawmakers

The risks of AI should be explored by cybersecurity professionals

CyberInsights LongReads is a part of CyberInsights and delves into one topic in detail each month. If you like what you are reading, you can subscribe to get LongReads in your email. Just remember to check your spam in case you do not receive these mails.
