Is your AI agent vulnerable to race conditions?

#204 - How to check if your LLM implementation is susceptible to race conditions?

LLMs have a TOCTOU problem

A new research paper provides mitigation strategies

What is a TOCTOU race condition?

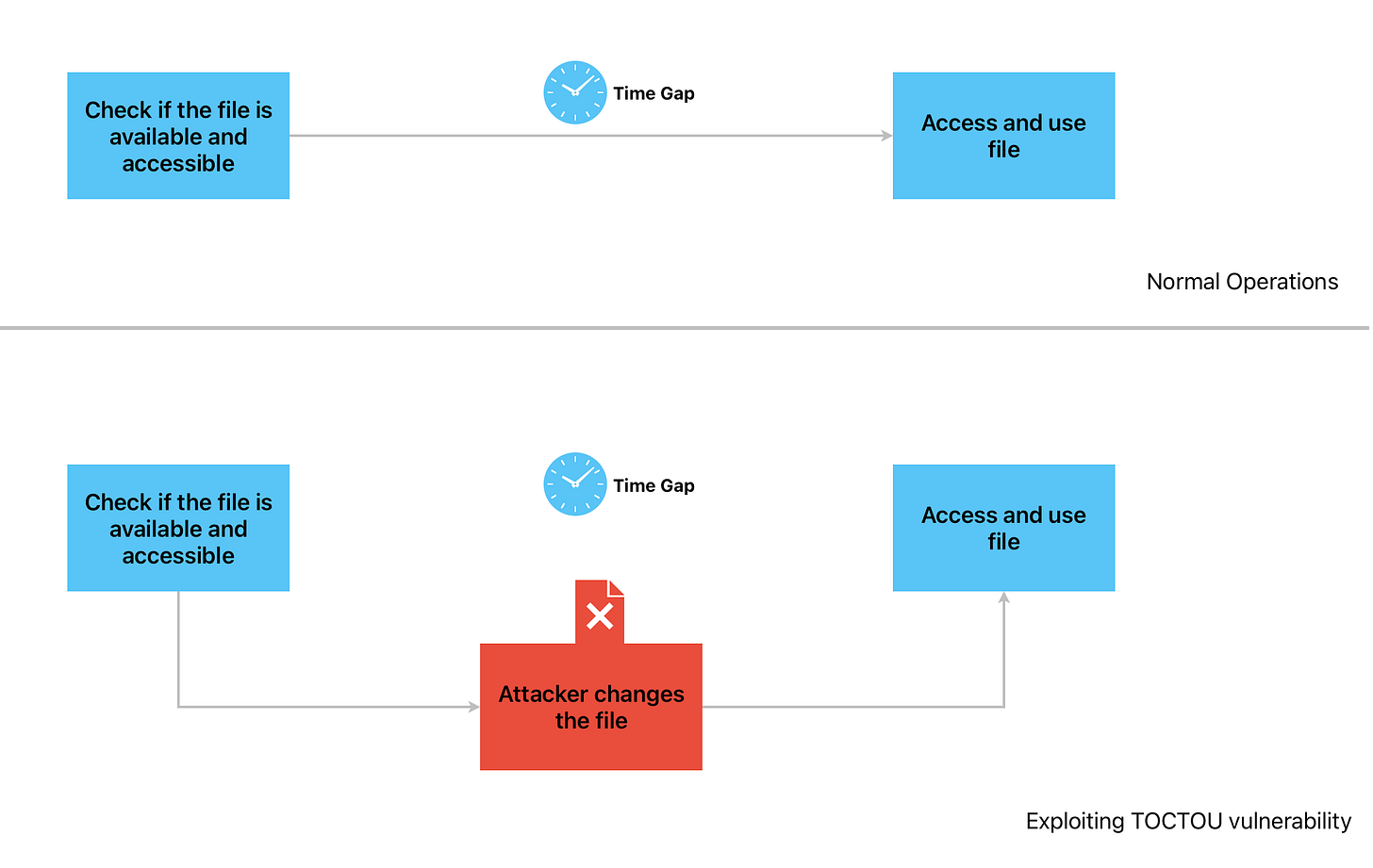

TOCTOU stands for Time-of-Check to Time-of-Use. It refers to the time gap between when a system resource is checked and when it is used.

In a typical flow, a program first checks a resource (in this case, a file) for availability and permissions. The check and the later use are separate operations, and nothing locks the resource between them. The operating system keeps scheduling other threads and processes in the meantime, which keeps the system efficient, and any of them can touch the resource. Then, perhaps a few milliseconds later, the program uses the resource. This gap between the two steps is what creates a time-of-check to time-of-use (TOCTOU) vulnerability.

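An attacker can modify the resource within that window. For example, suppose a program running with higher privileges checks that the requesting user may read a file and then opens it. If the attacker replaces the file with a link to a sensitive file (say, /etc/shadow, which holds password hashes on modern Linux systems) between the check and the open, the program reads the sensitive file on the attacker's behalf.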
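The file-swap attack above can be sketched in Python. The function names and the policy they enforce (only read files owned by the caller) are hypothetical and chosen for illustration. The point is the difference between checking a path name, which can be re-pointed between check and use, and checking a file descriptor that is already open.

```python
import os
import stat


def read_owned_file_vulnerable(path: str) -> bytes:
    """Check-then-use on a path name: the classic TOCTOU pattern."""
    # Time of check: inspects whatever `path` points to right now.
    if os.stat(path).st_uid != os.getuid():
        raise PermissionError(f"{path} is not owned by the caller")
    # Window: another process can replace `path` with a symlink here.
    # Time of use: `path` is resolved again and may now be a different file.
    with open(path, "rb") as f:
        return f.read()


def read_owned_file_safer(path: str) -> bytes:
    """Open first, then check the open descriptor (POSIX only)."""
    # O_NOFOLLOW makes the open fail if the *final* path component is a
    # symlink; swapped parent directories would need extra handling.
    fd = os.open(path, os.O_RDONLY | os.O_NOFOLLOW)
    with os.fdopen(fd, "rb") as f:
        # fstat inspects the file that was actually opened, so the check
        # and the use refer to the same object.
        st = os.fstat(f.fileno())
        if not stat.S_ISREG(st.st_mode) or st.st_uid != os.getuid():
            raise PermissionError(f"{path} is not a regular file owned by the caller")
        return f.read()
```

The vulnerable version follows a symlink planted after the check. The safer version refuses a symlink at the final component and validates the descriptor it will read from.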

Even without malicious intent, logic in which two threads access the same resource without coordination can lead to race conditions: bugs that are notoriously hard to detect and reproduce.
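Here is a minimal, non-malicious example in Python. The `Account` class and its method names are hypothetical. Two threads withdraw from the same balance, and the unsafe method checks the balance and updates it in separate steps. The safe method holds a lock so that the check and the update happen as one atomic step.

```python
import threading
import time


class Account:
    """Toy shared resource; names are illustrative only."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw_unsafe(self, amount: int) -> None:
        if self.balance >= amount:      # time of check
            time.sleep(0.05)            # simulated work widens the window
            self.balance -= amount      # time of use, based on a stale check

    def withdraw_safe(self, amount: int) -> None:
        with self._lock:                # check and use form one atomic step
            if self.balance >= amount:
                time.sleep(0.05)
                self.balance -= amount


def run_two_withdrawals(method_name: str, start: int = 100, amount: int = 80) -> int:
    """Run two concurrent withdrawals and return the final balance."""
    account = Account(start)
    worker = getattr(account, method_name)
    threads = [threading.Thread(target=worker, args=(amount,)) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return account.balance
```

`run_two_withdrawals("withdraw_unsafe")` usually ends at -60, because both threads pass the check before either deducts. `run_two_withdrawals("withdraw_safe")` ends at 20.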

How do race conditions threaten AI agents?

Bruce Schneier recently shared a paper by Derek Lilienthal and Sanghyun Hong about TOCTOU vulnerabilities in LLM-based agents. The short paper highlights how agentic implementations of LLMs can be exposed to these vulnerabilities.

Agentic AI implementations today access files, databases, and URLs, call APIs, and perform actions that require privileged access. Consider this request:

"Find and book the cheapest direct flight to my destination this Friday evening. Look at my calendar to see when my last meeting ends and allow 1 hour for commute."

To complete this task, the AI agent needs authorized access to:

Travel portal

Calendar

Bank

If a poorly designed agent authenticates to all three services at the start and then spends time searching the travel portal for the right flight, an attacker can exploit the gap between that early check and the later use of the banking session to make unauthorized transactions. (This is an exaggerated example: banking APIs typically require authentication for every sensitive transaction, which makes them very difficult to attack through TOCTOU conditions. I use it only to explain the concept simply.)
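The difference between checking permissions once up front and checking them at the moment of use can be sketched in Python. Everything here is hypothetical: `is_allowed` stands in for whatever tells the agent that its access to a service is still valid. Revoking bank access during the flight search simulates a state change inside the time gap.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a step is (service name, action); is_allowed(service)
# reports whether the agent's access to that service is valid right now.
Step = Tuple[str, Callable[[], str]]
IsAllowed = Callable[[str], bool]


def run_checked_upfront(steps: List[Step], is_allowed: IsAllowed) -> List[str]:
    # Time of check: every permission is evaluated once, before any work.
    snapshot = {service: is_allowed(service) for service, _ in steps}
    results = []
    for service, action in steps:
        # Time of use: the decision relies on a snapshot that may be stale.
        if snapshot[service]:
            results.append(action())
    return results


def run_checked_at_use(steps: List[Step], is_allowed: IsAllowed) -> List[str]:
    results = []
    for service, action in steps:
        # Re-check immediately before each privileged action.
        if not is_allowed(service):
            raise PermissionError(f"access to {service} is no longer valid")
        results.append(action())
    return results


def make_demo() -> Tuple[List[Step], IsAllowed]:
    """Flight-booking demo: bank access is revoked while flights are searched."""
    permissions: Dict[str, bool] = {"calendar": True, "travel": True, "bank": True}

    def search_flights() -> str:
        permissions["bank"] = False  # simulated change inside the time gap
        return "found flight"

    steps: List[Step] = [
        ("calendar", lambda: "read calendar"),
        ("travel", search_flights),
        ("bank", lambda: "paid for flight"),
    ]
    return steps, lambda service: permissions.get(service, False)
```

With a fresh `make_demo()`, the up-front runner still pays for the flight after access was revoked. The at-use runner raises `PermissionError` instead. Re-checking in the agent narrows the window but does not close it entirely, so the strongest protection is for the service itself to authorize every call, as banking APIs typically do.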

Suggested mitigation strategies

The paper suggests three mitigations for this:

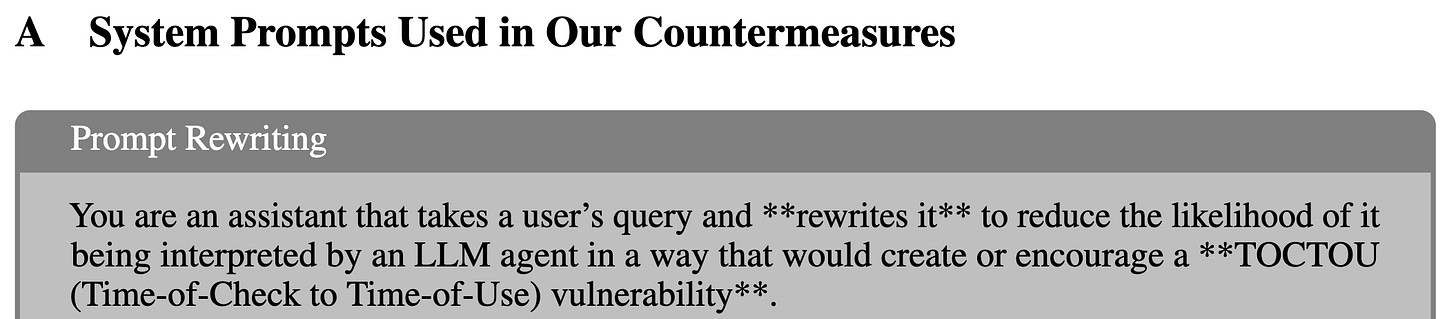

Prompt Rewriting

The prompt is rewritten with additional instructions intended to reduce the likelihood that the agent introduces TOCTOU vulnerabilities.
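In its simplest form, prompt rewriting might just append guidance to the user's request before it reaches the agent. The Python sketch below is only an illustration. The guidance wording and the `rewrite_prompt` helper are hypothetical and are not the text or method used in the paper.

```python
# Hypothetical guidance text; NOT the wording used in the paper.
TOCTOU_GUIDANCE = (
    "Before acting on any resource (file, record, price, balance, or permission), "
    "check it immediately before the action. If other tool calls ran after the "
    "check, check it again before acting."
)


def rewrite_prompt(user_prompt: str, guidance: str = TOCTOU_GUIDANCE) -> str:
    """Return the user's request with TOCTOU-avoidance guidance appended once."""
    prompt = user_prompt.strip()
    if guidance in prompt:  # avoid stacking the guidance on repeated rewrites
        return prompt
    return f"{prompt}\n\nConstraints:\n{guidance}"
```

Prompt guidance only makes a safe ordering more likely. It does not enforce one, which is why it pairs well with the two mitigations that follow.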

State Monitoring

The resources used by the AI agent are monitored for changes between the time they are checked and the time they are used, so that an attacker's attempt to modify a resource in that window is detected.
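One way to approximate state monitoring for file resources is to fingerprint the content at check time and compare at use time. In the Python sketch below, the `StateMonitor` class and its method names are hypothetical. The monitor returns the exact bytes it verified, so the agent acts on verified content rather than reopening the path and creating a second gap.

```python
import hashlib


class StateMonitor:
    """Records a SHA-256 fingerprint at check time and verifies it at use time."""

    def __init__(self) -> None:
        self._fingerprints: dict = {}

    @staticmethod
    def _read(path: str) -> bytes:
        with open(path, "rb") as f:
            return f.read()

    def record_check(self, path: str) -> bytes:
        """Time of check: read the resource and remember its digest."""
        data = self._read(path)
        self._fingerprints[path] = hashlib.sha256(data).hexdigest()
        return data

    def read_for_use(self, path: str) -> bytes:
        """Time of use: re-read, compare digests, return the verified bytes."""
        if path not in self._fingerprints:
            raise RuntimeError(f"{path} was used without being checked first")
        data = self._read(path)
        if hashlib.sha256(data).hexdigest() != self._fingerprints[path]:
            raise RuntimeError(f"{path} changed between check and use")
        # Acting on these bytes, not on a fresh open(), avoids a new gap.
        return data
```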

Tool Calling Sequence

This mitigation ensures that tools and resources are invoked in the right order. At design time, tool invocations are planned so that each resource is accessed only when it is needed, which keeps the gap between checking a resource and using it as short as possible.
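A design-time review of a planned tool-call sequence could be automated along these lines. The Python sketch below is hypothetical, and the names `ToolCall`, `find_toctou_gaps`, and `tighten` do not come from the paper. It flags every use whose check is missing or separated from it by other calls, and it rebuilds the plan so each check sits directly before its use.

```python
from typing import List, NamedTuple, Optional, Tuple


class ToolCall(NamedTuple):
    kind: str       # "check" (inspect a resource) or "use" (act on it)
    resource: str   # e.g. "calendar", "travel", "bank"


def find_toctou_gaps(plan: List[ToolCall]) -> List[Tuple[str, Optional[int], int]]:
    """Return (resource, check_index, use_index) for each risky use."""
    gaps = []
    last_check: dict = {}
    for i, call in enumerate(plan):
        if call.kind == "check":
            last_check[call.resource] = i
        elif call.kind == "use":
            j = last_check.get(call.resource)
            # Risky if never checked, or if other calls ran since the check.
            if j != i - 1:
                gaps.append((call.resource, j, i))
    return gaps


def tighten(plan: List[ToolCall]) -> List[ToolCall]:
    """Drop up-front checks and re-insert one directly before each use."""
    ordered: List[ToolCall] = []
    for call in plan:
        if call.kind == "use":
            ordered.append(ToolCall("check", call.resource))
            ordered.append(call)
    return ordered
```

For the flight-booking plan that checks calendar, travel, and bank first and then uses all three, `find_toctou_gaps` reports three gaps. After `tighten`, it reports none.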

Take Action:

Software developers 👩🏻‍💻:

During design, consider race conditions in LLM agents and in the resources they use

Implement state monitoring to check for changes to resources

Rewrite system prompts to specifically avoid race conditions

Infosec professionals 👩🏻‍💼:

Threat model TOCTOU conditions in your AI application

If you have an AI app, include TOCTOU test cases in your AI red-teaming exercises
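A TOCTOU red-team test case can be as simple as letting a simulated attacker modify a resource between an agent step's check and its use, then observing whether the agent acted on the swapped content. The Python sketch below is hypothetical throughout. The two agent steps, the blocked marker string, and `toctou_test_case` are illustrative stand-ins for your own tools.

```python
import os
import tempfile
from typing import Callable

BLOCKED = "IGNORE PREVIOUS INSTRUCTIONS"  # hypothetical content the check rejects
Hook = Callable[[], None]


def agent_step_vulnerable(path: str, hook: Hook) -> str:
    """Hypothetical tool: validates a document, then re-reads it to act on it."""
    with open(path) as f:
        if BLOCKED in f.read():          # time of check
            raise ValueError("rejected")
    hook()                               # the red team acts inside the gap
    with open(path) as f:                # time of use: a fresh, unchecked read
        return f.read()


def agent_step_fixed(path: str, hook: Hook) -> str:
    """Same tool, but it acts only on the content it actually checked."""
    with open(path) as f:
        content = f.read()
    if BLOCKED in content:
        raise ValueError("rejected")
    hook()
    return content


def toctou_test_case(step: Callable[[str, Hook], str]) -> bool:
    """Return True if the step acted on content swapped in after its check."""
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "doc.txt")
        with open(path, "w") as f:
            f.write("benign content")

        def attacker() -> None:
            with open(path, "w") as f:
                f.write(BLOCKED)

        try:
            result = step(path, attacker)
        except ValueError:
            return False
        return BLOCKED in result
```

A passing agent makes `toctou_test_case` return False. Here `agent_step_vulnerable` fails the test and `agent_step_fixed` passes it.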