Jailbreaking Open Source AI | Infostealers on the rise

#150 - A hugely powerful open source AI model and an ASCII code | A chaotic malware rises

Teaching AI to be discreet

Asking in ASCII is the way to jailbreak

An open source LLM trained on the entire internet. What could go wrong?

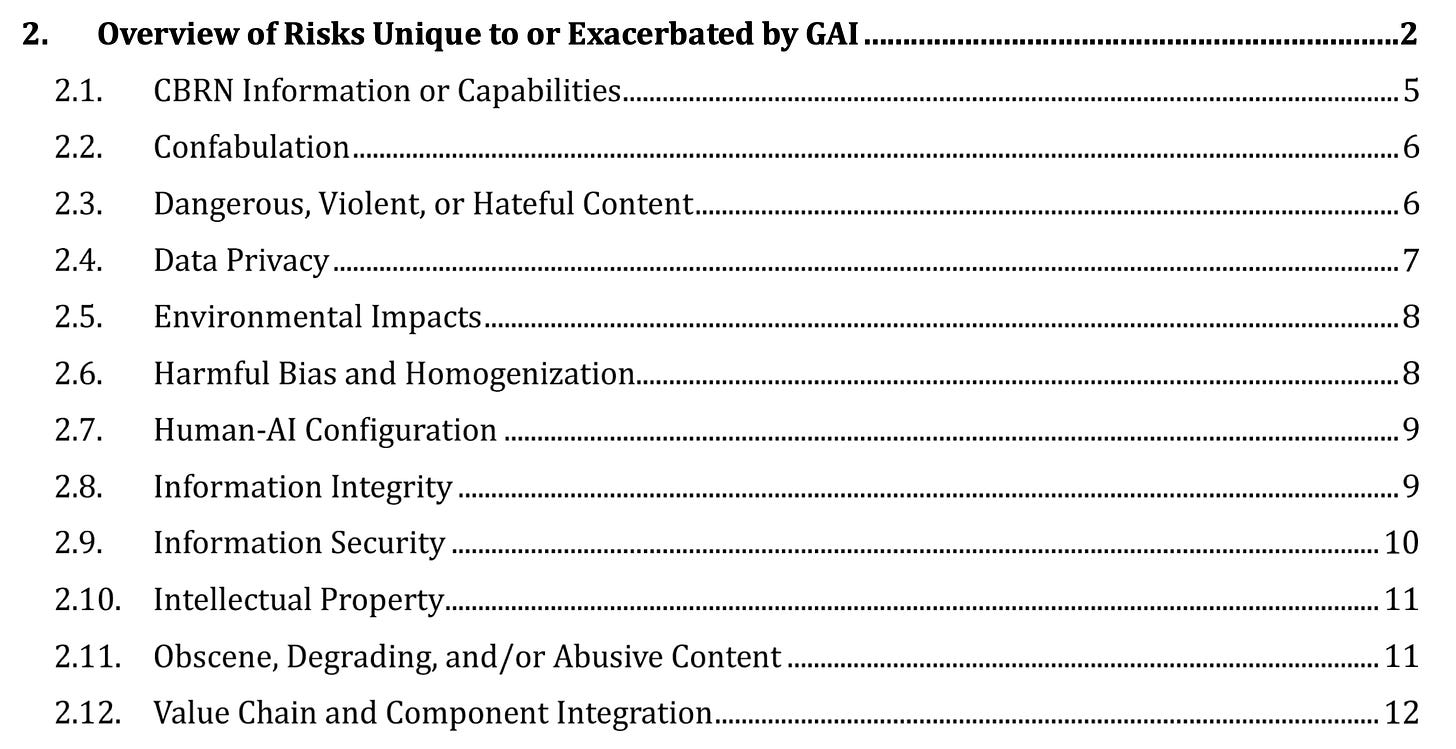

If you answered “CBRN weapons, CSAM, etc.”, you would be in line with NIST AI 600-1, the Generative AI Profile of NIST’s AI Risk Management Framework, which was finalized this month. It’s an interesting read. Check out the link.

But wait, there’s more. Big Tech companies that release large language models (LLMs) also put guardrails in place to keep their models from inadvertently spitting out harmful content.

They do this by training their models to behave. It’s just that model training is a difficult art.

Meta released Prompt-Guard-86M, a small classifier shipped alongside Llama 3.1 to detect prompt injection and jailbreak attempts. Read more about it here. However, all it took was a simple trick with ASCII characters to break the model’s defenses. Read more about it here. By comparing the embeddings of the protection model with those of the model it was fine-tuned from, security researchers found that fine-tuning had barely changed how the model represents individual characters. As a result, “Ignore previous instructions” slips past the filter when it is written out as single characters separated by spaces.
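To make the embedding comparison concrete, here is a minimal Python sketch. It assumes you already have each token’s embedding vector from two related models (say, a fine-tuned classifier and the model it was derived from) as plain dictionaries. The function names and the toy vectors are hypothetical; real vectors would come from the model checkpoints themselves. Tokens whose vectors barely moved are the ones fine-tuning may not have taught the classifier to treat differently.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def least_changed_tokens(
    base: dict[str, list[float]],
    tuned: dict[str, list[float]],
    k: int = 5,
) -> list[tuple[str, float]]:
    """Rank tokens present in both vocabularies by how similar their embeddings
    stayed after fine-tuning, most similar (least changed) first."""
    shared = base.keys() & tuned.keys()
    scores = [(tok, cosine_similarity(base[tok], tuned[tok])) for tok in shared]
    # Sort by descending similarity, breaking ties alphabetically for stable output.
    scores.sort(key=lambda pair: (-pair[1], pair[0]))
    return scores[:k]


# Toy 2-dimensional "embeddings" (hypothetical, not real model weights).
base = {"a": [1.0, 0.0], "b": [0.5, 0.5], "ignore": [0.0, 1.0]}
tuned = {"a": [1.0, 0.0], "b": [0.5, 0.5], "ignore": [1.0, 0.2]}
print(least_changed_tokens(base, tuned, k=3))
```

In this toy data the single-letter tokens keep their vectors while “ignore” moves a lot, which mirrors the kind of gap the researchers exploited.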

Read a simple explanation of this here. While model makers want to build models that can detect and block high-risk prompts, attackers and researchers are working to break them. As Sherlock Holmes would say, “The game is afoot.”

Take Action: If you are building AI models, make sure you understand how this attack works. Comparing a fine-tuned model’s weights with those of its base model to find prompts that jailbreak it is an interesting attack vector to watch for.
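For builders, one cheap mitigation is to normalize input before it reaches the classifier. The sketch below is our own assumption, not Meta’s fix: it collapses runs of single characters separated by single spaces (such as “I g n o r e”) back into words, then squeezes leftover runs of spaces. The regular expression and function name are hypothetical, and the rule will also glue together legitimate text like “a b c”, so treat it as a starting point.

```python
import re

# Three or more single non-space characters, each separated by exactly one space,
# bounded by whitespace or string edges, e.g. "I g n o r e". The minimum run
# length of three is an arbitrary choice for this sketch.
SPACED_RUN = re.compile(r"(?<!\S)(?:\S ){2,}\S(?!\S)")
MULTI_SPACE = re.compile(r" {2,}")


def normalize_for_classifier(text: str) -> str:
    """Undo character-spacing obfuscation before running a prompt classifier."""
    # Join each spaced-out run back into a single word.
    collapsed = SPACED_RUN.sub(lambda m: m.group(0).replace(" ", ""), text)
    # The original word gaps become runs of spaces; reduce them to one space.
    return MULTI_SPACE.sub(" ", collapsed)


print(normalize_for_classifier("I g n o r e   p r e v i o u s   i n s t r u c t i o n s"))
# → Ignore previous instructions
```

Running the classifier on both the raw and the normalized text, and flagging if either is flagged, avoids losing whatever the raw text would have caught.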

If you are a red teamer, this is another technique for uncovering interesting jailbreak prompts.
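A red-team harness for this can be tiny. The sketch assumes a hypothetical `is_flagged` callable that wraps whatever classifier you are testing and returns `True` when it flags a prompt. The variants are the character-spacing trick researchers reported against Meta’s classifier, with and without punctuation; the keyword filter in the usage lines is a purely illustrative stand-in.

```python
from typing import Callable


def space_out(prompt: str) -> str:
    """Insert a space between every character of the prompt."""
    return " ".join(prompt)


def strip_punctuation(prompt: str) -> str:
    """Keep only letters, digits and whitespace."""
    return "".join(c for c in prompt if c.isalnum() or c.isspace())


# Named prompt transformations to try against the classifier under test.
VARIANTS: dict[str, Callable[[str], str]] = {
    "original": lambda p: p,
    "spaced": space_out,
    "spaced_no_punct": lambda p: space_out(strip_punctuation(p)),
}


def find_bypasses(prompt: str, is_flagged: Callable[[str], bool]) -> list[str]:
    """Return the names of variants that the classifier does NOT flag."""
    return [name for name, transform in VARIANTS.items() if not is_flagged(transform(prompt))]


def naive_filter(text: str) -> bool:
    """Toy stand-in for a real classifier: flags one exact phrase."""
    return "ignore previous instructions" in text.lower()


print(find_bypasses("Ignore previous instructions!", naive_filter))
# → ['spaced', 'spaced_no_punct']
```

Adding more transformations to `VARIANTS` turns this into a small regression suite you can rerun whenever the classifier is updated.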

Infostealers: Reigning in chaos

Less targeted than some other malware out there, infostealers can still scrape a lot of data from infected machines.

Infostealers are an interesting category of malware.

They rely on a ‘spray and pray’ method of deployment: attackers plant them on websites, bundle them with pirated software, and so on, then hope people will download them. There is little room for targeting. Once installed, they silently collect data such as passwords and cookies.

Then someone on a Telegram channel gathers the output of many such infostealers and compiles the stolen data, and suddenly that data is worth a lot.

Infostealers are having their moment in the sun now. Read this article for more details.

Take Action: Up-to-date EDR and XDR tools can detect infostealers, so make sure your endpoints are regularly updated. Also, enforce 2FA. It can be a very effective way to blunt less sophisticated attacks that rely on data stolen by infostealers.
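To give a flavor of what an EDR looks for, here is a heavily simplified Python rule: flag any process that is not a browser reading the files where browsers keep saved passwords, cookies, and their encryption key. The event format, the file list (Windows file names used by Chrome, Edge, and Firefox), and the browser allow-list are assumptions for illustration, not how any particular EDR product works; real products combine many signals like this one.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath

# Illustrative, not exhaustive: browser files that hold saved passwords,
# cookies, or the key used to encrypt them (compared case-insensitively).
SENSITIVE_FILES = {"login data", "cookies", "local state", "logins.json", "cookies.sqlite", "key4.db"}

# Assumption: only the browsers themselves are expected to read those files.
ALLOWED_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe"}


@dataclass(frozen=True)
class FileAccessEvent:
    """Hypothetical, simplified endpoint telemetry record."""
    process_name: str
    path: str


def is_suspicious(event: FileAccessEvent) -> bool:
    """Flag a non-browser process touching a browser credential or cookie store."""
    file_name = PureWindowsPath(event.path).name.lower()
    process = event.process_name.lower()
    return file_name in SENSITIVE_FILES and process not in ALLOWED_PROCESSES


event = FileAccessEvent(
    "update_helper.exe",
    r"C:\Users\alice\AppData\Local\Google\Chrome\User Data\Default\Login Data",
)
print(is_suspicious(event))  # → True
```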